The Ultimate Technical SEO Checklist

1. Update your page experience: Core Web Vitals

Google’s page experience signals combine Core Web Vitals with existing search signals such as mobile-friendliness, HTTPS, and the absence of intrusive interstitials.

If you need a refresher, Google’s Core Web Vitals consist of three factors:

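- First Input Delay (FID) – FID measures the delay between a visitor’s first interaction with the page (a click or tap, for example) and the moment the browser can respond to it. To ensure a good user experience, the page should have an FID of less than 100 ms. (Google replaced FID with Interaction to Next Paint, INP, as a Core Web Vital in March 2024.)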

- Largest Contentful Paint (LCP) – LCP measures the loading performance of the largest contentful element on the screen. This should happen within 2.5 seconds to provide a good user experience.

- Cumulative Layout Shift (CLS) – This measures the visual stability of elements on the screen. CLS is a unitless score, not a time, and sites should strive for their pages to maintain a CLS of 0.1 or less.
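As a rough illustration, the thresholds listed above can be encoded as a small check. This is a minimal Python sketch, not an official tool: the function name `failing_metrics` and the sample measurements are made up for the example, and real values would come from a tool such as PageSpeed Insights.

```python
# Thresholds for a "good" experience, taken from the list above.
GOOD_THRESHOLDS = {
    "FID": 100.0,   # milliseconds
    "LCP": 2500.0,  # milliseconds (2.5 seconds)
    "CLS": 0.1,     # unitless layout-shift score
}


def failing_metrics(measurements):
    """Return the names of metrics that exceed their 'good' threshold."""
    return sorted(
        name
        for name, value in measurements.items()
        if name in GOOD_THRESHOLDS and value > GOOD_THRESHOLDS[name]
    )


# Hypothetical measurements for a single page.
page = {"FID": 80, "LCP": 3100, "CLS": 0.05}
print(failing_metrics(page))  # ['LCP']
```

Here only LCP is flagged, because 3,100 ms is slower than the 2.5-second target while the other two values are within range.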

These ranking factors can be measured in the Core Web Vitals report in Google Search Console, which shows you which URLs have potential issues.

There are plenty of tools to help you improve your site speed and Core Web Vitals, the main one being Google PageSpeed Insights.

Webpagetest.org is also a good place to check how fast different pages of your site are from various locations, operating systems, and devices.

Some optimizations you can make to improve website speed include:

- Implementing lazy-loading for non-critical images

- Optimizing image formats for the browser

- Improving JavaScript performance

2. Crawl your site and look for any crawl errors

Second, you’ll want to be sure your site is free from any crawl errors. Crawl errors occur when a search engine tries to reach a page on your website but fails.

You can crawl your site with Screaming Frog or a similar website crawler. Once the crawl finishes, review the report for crawl errors. You can also check for them in Google Search Console.

When scanning for crawl errors, you’ll want to…

a) Make sure every permanent redirect is implemented as a 301 redirect.

b) Go through any 4xx and 5xx error pages to figure out where you want to redirect them to.

Bonus: To take this to the next level, you should also be on the lookout for redirect chains, where a URL passes through several redirects before reaching its destination, and redirect loops, where a URL eventually redirects back to itself.
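One way to spot chains and loops is to export the redirects found by your crawler and follow each one to its end. The sketch below assumes the redirects are available as a simple source-to-target mapping; the function name `trace_redirects`, the `max_hops` limit, and the sample paths are all hypothetical.

```python
def trace_redirects(start_url, redirects, max_hops=10):
    """Follow start_url through a {source: target} redirect map.

    Returns (path, status), where status is "ok" (at most one hop),
    "chain" (more than one hop), or "loop" (a URL repeats).
    """
    path = [start_url]
    seen = {start_url}
    current = start_url
    while current in redirects and len(path) <= max_hops:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"
        seen.add(current)
    hops = len(path) - 1
    return path, ("chain" if hops > 1 else "ok")


# Hypothetical redirect map exported from a crawl.
redirects = {
    "/old": "/interim",
    "/interim": "/new",
    "/a": "/b",
    "/b": "/a",
    "/moved": "/home",
}

print(trace_redirects("/old", redirects))    # (['/old', '/interim', '/new'], 'chain')
print(trace_redirects("/a", redirects))      # (['/a', '/b', '/a'], 'loop')
print(trace_redirects("/moved", redirects))  # (['/moved', '/home'], 'ok')
```

A chain is usually fixed by pointing the first URL straight at the final destination, so that each old URL needs only one hop.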

3. Fix broken internal and outbound links

A poor link structure creates a bad experience for both people and search engines. It is frustrating to click a link on your website and find that it doesn’t lead to the correct, or even a working, URL.

Check for the following issues:

- Links that 301 or 302 redirect to another page

- Links that go to a 4xx error page

- Orphaned pages (pages that aren’t linked to at all)

- An internal linking structure that is too deep

To fix broken links, you should update the target URL or remove the link altogether if it doesn’t exist anymore.
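Orphaned pages and overly deep pages can both be found from the internal link graph. The following is a minimal sketch, assuming you already have a mapping from each known page to the pages it links to (for example, from a crawl combined with your sitemap). The function name `audit_link_graph`, the sample paths, and the depth limit of 3 clicks are assumptions for illustration, not a fixed rule.

```python
from collections import deque


def audit_link_graph(links, home, max_depth=3):
    """links maps each known page to the internal pages it links to.

    Returns (orphaned, too_deep): pages nothing links to, and pages
    that take more than max_depth clicks to reach from the home page.
    """
    # Breadth-first search gives the minimum click depth of each page.
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)

    linked_to = {t for targets in links.values() for t in targets}
    all_pages = set(links) | linked_to
    orphaned = sorted(all_pages - linked_to - {home})
    too_deep = sorted(p for p, d in depth.items() if d > max_depth)
    return orphaned, too_deep


# Hypothetical site structure.
links = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-2"],
    "/blog/post-2": ["/blog/post-3"],
    "/blog/post-3": [],
    "/products": [],
    "/landing-2019": ["/"],
}

print(audit_link_graph(links, "/"))  # (['/landing-2019'], ['/blog/post-3'])
```

In this example `/landing-2019` is orphaned because no page links to it, and `/blog/post-3` sits four clicks from the home page.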

The experts at Perfect Search are always on the lookout for these errors and the other issues covered in this technical SEO checklist. Contact us for a free site audit and we’ll help you identify quick wins and key focus areas, no contract required.

4. Get rid of any duplicate or thin content

Make sure there’s no duplicate or thin content on your site. Duplicate content can be caused by many factors, including page replication from faceted navigation, having multiple versions of the site live, and scraped or copied content.

It’s important that you allow Google to index only one version of your site. For example, search engines treat each of these URLs as a separate website, rather than as one website:

– https://www.abc.com

– https://abc.com

– http://www.abc.com

– http://abc.com

You can fix duplicate content in the following ways:

- Set up 301 redirects to the primary version of the URL. If your preferred version is https://www.abc.com, the other three versions should 301 redirect directly to that version.

- Implement noindex or canonical tags on duplicate pages.

- Set the preferred domain in Google Search Console. (Google has since retired this setting, so rely on redirects and canonical tags instead.)

- Set up parameter handling in Google Search Console. (Google has also retired the URL Parameters tool.)

- Where possible, delete any duplicate content.
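To make the redirect rule concrete, here is a small sketch that maps any of the four versions above to the preferred one. It only computes the redirect target; the actual 301 would be configured in your web server or CDN. The function name `preferred_url` and the constants are illustrative assumptions.

```python
from urllib.parse import urlsplit, urlunsplit

# Preferred version from the example above.
PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.abc.com"
SITE_HOSTS = {"abc.com", "www.abc.com"}


def preferred_url(url):
    """Return the URL that a given variant should 301 redirect to."""
    parts = urlsplit(url)
    if parts.netloc.lower() not in SITE_HOSTS:
        return url  # Not one of our hosts; leave it unchanged.
    # An empty path is normalized to "/" so the result is a complete URL.
    return urlunsplit(
        (PREFERRED_SCHEME, PREFERRED_HOST, parts.path or "/", parts.query, parts.fragment)
    )


for variant in ("http://abc.com", "http://www.abc.com", "https://abc.com/pricing"):
    print(variant, "->", preferred_url(variant))
```

Every variant resolves to a single `https://www.abc.com/...` address in one step, which also avoids creating redirect chains.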

5. Migrate your site to HTTPS protocol

Back in 2014, Google announced that HTTPS protocol was a ranking factor. Today, if your site is still HTTP, it’s time to make the switch.

HTTPS encrypts the data exchanged between your visitors and your site, which helps protect it from interception and tampering.

6. Make sure your URLs have a clean structure

Straight from the mouth of Google: “A site’s URL structure should be as simple as possible.”

Overly complex URLs can cause problems for crawlers by creating unnecessarily high numbers of URLs that point to identical or similar content on your site.

As a result, Googlebot may be unable to completely index all the content on your site.

Here are some examples of problematic URLs:

Sorting parameters. Some large shopping sites provide multiple ways to sort the same items, resulting in a much higher number of URLs. For example:

http://www.example.com/results?search_type=search_videos&search_query=tpb&search_sort=relevance&search_category=25

Irrelevant parameters in the URL, such as referral parameters. For example:

http://www.example.com/search/noheaders?click=6EE2BF1AF6A3D705D5561B7C3564D9C2&clickPage=OPD+Product+Page&cat=79

Where possible, you’ll want to shorten URLs by trimming these unnecessary parameters.
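Trimming parameters can be sketched with Python’s standard `urllib.parse` module. Which parameters are safe to drop depends entirely on your site, so the `IGNORED_PARAMS` set below is a hypothetical choice based on the two example URLs above, and `trim_url` is an illustrative name.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters that do not change the page content.
IGNORED_PARAMS = {"click", "clickPage", "search_sort"}


def trim_url(url, ignored=IGNORED_PARAMS):
    """Return url with the ignored query parameters removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in ignored
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment)
    )


url = (
    "http://www.example.com/search/noheaders"
    "?click=6EE2BF1AF6A3D705D5561B7C3564D9C2&clickPage=OPD+Product+Page&cat=79"
)
print(trim_url(url))  # http://www.example.com/search/noheaders?cat=79
```

The trimmed form is the one you would typically use in internal links, canonical tags, and your XML sitemap.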

7. Ensure your site has an optimized XML sitemap

XML sitemaps tell search engines about your site structure and which URLs you want them to crawl and index.

An optimized XML sitemap should include:

- Any new content that’s added to your site (recent blog posts, products, etc.).

- Only 200-status URLs.

- No more than 50,000 URLs. If your site has more, split the URLs across multiple XML sitemaps and list them in a sitemap index file.

You should exclude the following from the XML sitemap:

- URLs with parameters

- URLs that 301 redirect, carry a noindex tag, or have a canonical tag pointing to a different URL

- URLs with 4xx or 5xx status codes

- Duplicate content
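The inclusion and exclusion rules above can be sketched as a small sitemap builder. This is a simplified example that assumes you have a list of crawled URLs with their status codes; it filters only on status code and query parameters (checking canonical and noindex tags would need the page HTML), and the names `eligible` and `build_sitemaps` are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # Per-file limit mentioned above.


def eligible(url, status):
    """Keep only 200-status URLs without query parameters."""
    return status == 200 and "?" not in url


def build_sitemaps(pages, max_urls=MAX_URLS):
    """pages is a list of (url, status_code) pairs.

    Returns a list of sitemap XML strings, one per max_urls URLs.
    """
    urls = [url for url, status in pages if eligible(url, status)]
    sitemaps = []
    for start in range(0, len(urls), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in urls[start:start + max_urls]:
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        sitemaps.append(ET.tostring(urlset, encoding="unicode"))
    return sitemaps


# Hypothetical crawl results.
pages = [
    ("https://www.abc.com/", 200),
    ("https://www.abc.com/old-page", 301),
    ("https://www.abc.com/search?q=shoes", 200),
    ("https://www.abc.com/missing", 404),
]
for xml in build_sitemaps(pages):
    print(xml)
```

Only the home page survives the filter here, so the output is a single sitemap with one `<url>` entry.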

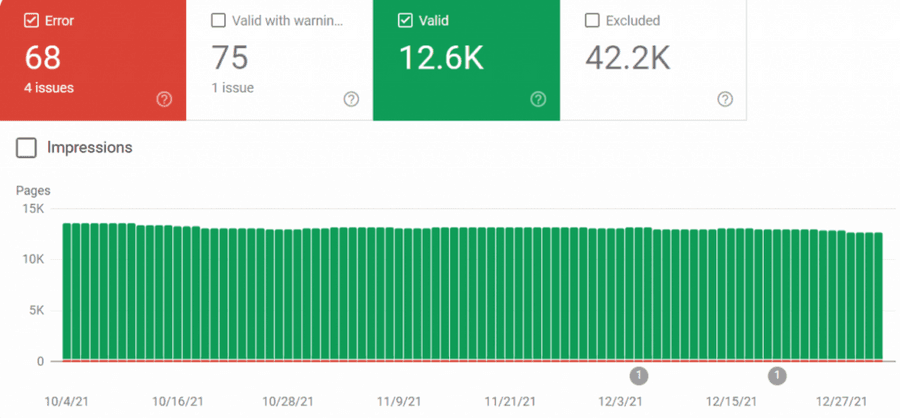

You can check the Index Coverage report in Google Search Console to see whether any URLs in your XML sitemap have indexing errors.

8. Make sure your site has an optimized robots.txt file

Robots.txt files are instructions for search engine robots on how to crawl your website.

Every website has a “crawl budget,” a limited number of pages that a search engine will crawl in a given period, so it’s important to make sure crawlers spend that budget on your most important pages.

On the flip side, make sure your robots.txt file isn’t blocking anything that you want crawled and indexed.

Here are some types of URLs that you may want to disallow in your robots.txt file:

- Temporary files

- Admin pages

- Cart & checkout pages

- Search-related pages

- URLs that contain parameters

Finally, you’ll want to include the location of the XML sitemap in the robots.txt file. You can use Google’s robots.txt tester to verify your file is working correctly.
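You can also sanity-check a robots.txt file locally with Python’s standard `urllib.robotparser` module. The file contents, paths, and domain below are hypothetical examples. Note that this parser does simple prefix matching and does not understand wildcard patterns such as `*`, so rules for parameterized URLs are left out of the sketch. The `site_maps()` method requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt covering several of the URL types listed above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /tmp/
Disallow: /admin/
Disallow: /cart/
Disallow: /checkout/
Disallow: /search

Sitemap: https://www.abc.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Check that important pages are crawlable and private ones are blocked.
for path in ("/blog/technical-seo", "/admin/login", "/search?q=shoes"):
    allowed = parser.can_fetch("*", "https://www.abc.com" + path)
    print(path, "allowed" if allowed else "blocked")

print(parser.site_maps())  # ['https://www.abc.com/sitemap.xml']
```

Running a check like this before deploying a new robots.txt file helps catch a rule that accidentally blocks a page you want crawled.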

9. Add structured data or schema markup

Structured data helps provide information about a page and its content – giving context to Google about the meaning of a page, and helping your organic listings stand out on the SERPs.

One of the most common types of structured data is called schema markup.

There are many different kinds of schema markups for structuring data for people, places, organizations, local businesses, reviews, and so much more.

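You can create schema markup with online schema markup generators, then validate it with Google’s Rich Results Test or the Schema Markup Validator, which replaced Google’s Structured Data Testing Tool. These tools check existing markup; they do not create it for you.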
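Schema markup is most often added to a page as a JSON-LD script tag. The sketch below generates one for an organization; the function name `organization_jsonld` and the sample values are placeholders, and the properties shown are only a small subset of what the schema.org `Organization` type supports.

```python
import json


def organization_jsonld(name, url, logo_url):
    """Return a JSON-LD <script> tag describing an organization."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo_url,
    }
    # Escape "</" so a value can never close the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Placeholder values for illustration.
print(organization_jsonld(
    "Example Co",
    "https://www.example.com",
    "https://www.example.com/logo.png",
))
```

The resulting tag goes in the page’s HTML, and you can paste it into a validator to confirm it parses as expected.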

10. Use this Technical SEO Checklist to review site health regularly

Even small website changes can cause fluctuations in technical SEO health. Internal and external links break when the URL they point to changes or is removed, whether on your own site or on the site you link to. New pages, reorganizations, site migrations, and redesigns do not always carry over important SEO elements such as schema markup, sitemaps, and robots.txt, or they move them to a location that Google will not recognize. Plan to crawl your site and work through this checklist whenever you make major changes to your website, and on a regular schedule in between, so that a technical SEO issue does not disrupt your organic traffic.

Are you looking for a partner who can take care of your technical SEO?

We’re here to help!
